AI hallucination, where a model confidently produces incorrect or fabricated output, is not an unexpected phenomenon. While LLMs are continuously improving, they are hardly a “one size fits all” solution, and it isn’t fair to expect them to know, understand, and do everything. This is where specialized solutions can fill in the gaps. One effective way to build such a solution is RAG (Retrieval-Augmented Generation). RAG systems provide LLMs with additional context, the secret sauce that anchors AI firmly in reality. In this article, we explain what RAG is, how it works, and how RAG systems can transform businesses.

What Exactly is RAG?

Imagine having a brilliant assistant who knows exactly where to look for accurate information whenever they’re asked a question. Instead of guessing or making something up, they swiftly pull facts straight from trusted, verified resources before formulating their response. These verified resources are usually industry- and business-specific sources that provide your organization’s context to the AI model. That’s RAG in a nutshell.

Retrieval & Pre-Processing

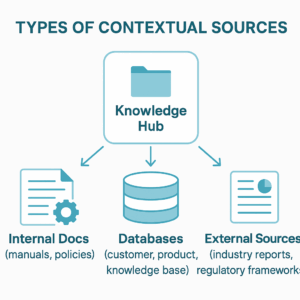

The R in RAG refers to retrieval, along with the pre-processing that makes it possible: pulling relevant context from large, reliable datasets. These datasets can be external or internal, such as web pages, databases, and knowledge bases. All this data is pre-processed so the system can search it efficiently. Typically, documents are split into smaller chunks, converted into numerical representations (embeddings), and stored in an index.
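To make the retrieval and pre-processing step concrete, here is a minimal Python sketch, not a production implementation. It assumes plain-text documents, splits them into fixed-size word chunks, and ranks chunks against a question by cosine similarity. As a simplifying assumption, word-count vectors stand in for the neural embeddings a real system would typically use; the function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter

# Assumption: word-count vectors stand in for real embeddings in this sketch.

def chunk_text(text: str, max_words: int = 50) -> list[str]:
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def vectorize(text: str) -> Counter:
    """Lowercase the text, split it into alphanumeric tokens, and count them."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def build_index(docs: dict[str, str], max_words: int = 50) -> list[tuple[str, str, Counter]]:
    """Pre-processing: chunk every document and store (source_id, chunk, vector)."""
    index = []
    for doc_id, text in docs.items():
        for n, chunk in enumerate(chunk_text(text, max_words)):
            index.append((f"{doc_id}#{n}", chunk, vectorize(chunk)))
    return index

def retrieve(index: list[tuple[str, str, Counter]], query: str, k: int = 2) -> list[tuple[str, str]]:
    """Retrieval: return the k chunks most similar to the query."""
    q = vectorize(query)
    ranked = sorted(index, key=lambda entry: cosine(q, entry[2]), reverse=True)
    return [(source_id, chunk) for source_id, chunk, _ in ranked[:k]]

# Hypothetical sample knowledge base.
docs = {
    "returns-policy": "Customers may return unused items within 30 days of purchase for a full refund.",
    "shipping-faq": "Standard shipping takes 3 to 5 business days. Express shipping takes 1 business day.",
}
index = build_index(docs)
print(retrieve(index, "How many days to return unused items?", k=1))
```

In practice, the index would usually live in a vector database and the vectors would come from an embedding model, but the shape of the step is the same: chunk, vectorize, store, then rank by similarity.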

Generation

When a user asks a question, the most relevant pre-processed chunks are retrieved and added to the prompt sent to the LLM of choice. The model itself is not retrained; instead, it receives this context alongside the question, which gives it a more comprehensive understanding of the topic. Equipped with this additional context, the LLM can deliver better, more accurate responses.
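The following sketch shows what adding context can look like in practice: retrieved passages are placed in the prompt ahead of the question. The prompt wording, the hypothetical `build_augmented_prompt` helper, and the sample passage are illustrative assumptions; the resulting string would be sent to whichever LLM API you use.

```python
def build_augmented_prompt(question: str, chunks: list[tuple[str, str]]) -> str:
    """Insert retrieved (source_id, text) chunks into the prompt.

    The LLM is not retrained; the extra context simply travels with the query.
    """
    context = "\n".join(f"[{source_id}] {text}" for source_id, text in chunks)
    return (
        "Answer the question using only the context below. "
        "Cite the source IDs you used. If the context does not contain the answer, "
        "say that you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

# Hypothetical retrieved passage.
retrieved = [
    ("returns-policy#0", "Customers may return unused items within 30 days of purchase for a full refund."),
]
prompt = build_augmented_prompt("How long do I have to return an item?", retrieved)
print(prompt)
```

Instructing the model to answer only from the supplied context, and to admit when it cannot, is a common way to discourage it from falling back on guesswork.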

Context: The Cure for AI’s Hallucinations

AI “hallucinations” can give the user a completely incorrect answer without the user realizing it. In business-critical operations, these mistakes can range from mildly amusing to catastrophically risky.

Context is the antidote. By using verified, contextual sources as its knowledge base, a RAG-based AI:

- Grounds its answers in documented sources.

- Reduces errors, since answers draw on verified material rather than the model’s memory alone.

- Maintains a clear audit trail of the sources behind its responses.
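One common way to get that audit trail is to keep the identifiers of the retrieved passages alongside every generated answer. The sketch below assumes a retriever that returns `(source_id, text)` pairs and a generator function; `fake_retrieve` and `fake_generate` are hypothetical stand-ins for a real search index and LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class AuditedAnswer:
    """An answer bundled with the IDs of the sources it was generated from."""
    question: str
    answer: str
    sources: list[str] = field(default_factory=list)

def answer_with_audit_trail(
    question: str,
    retrieve_fn: Callable[[str], list[tuple[str, str]]],
    generate_fn: Callable[[str, list[tuple[str, str]]], str],
) -> AuditedAnswer:
    """Retrieve context, generate an answer, and record which sources were used."""
    chunks = retrieve_fn(question)
    answer = generate_fn(question, chunks)
    return AuditedAnswer(question, answer, [source_id for source_id, _ in chunks])

# Hypothetical stand-ins for a real retriever and LLM call.
def fake_retrieve(question: str) -> list[tuple[str, str]]:
    return [("returns-policy#0", "Customers may return unused items within 30 days of purchase.")]

def fake_generate(question: str, chunks: list[tuple[str, str]]) -> str:
    return "You can return unused items within 30 days [returns-policy#0]."

record = answer_with_audit_trail("What is the return window?", fake_retrieve, fake_generate)
print(record.sources)  # ['returns-policy#0']
```

Storing records like this (for example, in a log or database) lets a reviewer trace any answer back to the documents that informed it.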

Essentially, RAG helps your AI assistant speak from documented evidence rather than guesswork.

How Setting Up RAG Actually Works (Simplified!)

Implementing RAG doesn’t require turning your IT department upside down. Here’s how straightforward it can be:

- Prepare Your Knowledge Base: Curate and index your existing internal documentation, databases, manuals, or relevant external resources.

- Link to AI: Connect this knowledge base directly to your AI model.

- Retrieve and Generate: When prompted, your AI pulls precise information from your knowledge base to construct a well-informed response.

- Iterate and Optimize: Continuously refine your knowledge base so it stays current and accurate, which improves your AI’s effectiveness over time.
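The four steps above can be sketched end to end in a few lines of Python. This is a toy under stated assumptions: a keyword-overlap index replaces a real vector store, the document IDs are hypothetical, and `echo_llm` is a hypothetical stand-in for an LLM call that simply returns the retrieved context. The last lines illustrate step 4: updating a document immediately changes what the system answers.

```python
import re
from typing import Callable

def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

class KnowledgeBase:
    """Step 1: a curated, indexed document collection (keyword index for illustration)."""

    def __init__(self) -> None:
        self.docs: dict[str, str] = {}
        self.index: dict[str, set[str]] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        """Add or replace a document and re-index it (step 4: keep it current)."""
        self.docs[doc_id] = text
        self.index[doc_id] = tokens(text)

    def search(self, query: str, k: int = 1) -> list[str]:
        """Step 3, retrieval: rank documents by how many query words they share."""
        q = tokens(query)
        ranked = sorted(self.docs, key=lambda d: len(q & self.index[d]), reverse=True)
        return ranked[:k]

def answer(kb: KnowledgeBase, question: str, llm: Callable[[str], str]) -> str:
    """Steps 2-3: link the knowledge base to the model via the prompt, then generate."""
    context = "\n".join(kb.docs[d] for d in kb.search(question))
    return llm(f"Context:\n{context}\n\nQuestion: {question}")

def echo_llm(prompt: str) -> str:
    """Hypothetical LLM stand-in: returns the first line of retrieved context."""
    return prompt.split("\n")[1]

kb = KnowledgeBase()
kb.upsert("returns", "Unused items can be returned within 30 days.")
kb.upsert("shipping", "Standard shipping takes 3 to 5 business days.")
question = "Within how many days can unused items be returned?"
print(answer(kb, question, echo_llm))  # Unused items can be returned within 30 days.

kb.upsert("returns", "Unused items can be returned within 45 days.")  # policy changed
print(answer(kb, question, echo_llm))  # Unused items can be returned within 45 days.
```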

The setup can be streamlined: frameworks such as LangChain and vector databases such as Pinecone and Weaviate make integrating RAG systems intuitive and achievable even for teams new to advanced AI.

Why You Should Start Today

RAG isn’t merely about accuracy; RAG systems can transform businesses. They can turn AI from a general-purpose tool with limited knowledge of your organization into a genuinely powerful, mission-critical asset.

These systems can provide a substantial competitive advantage. Faster, more accurate insights give your business a tangible edge in decision-making and customer interactions. For technically advanced companies, providing additional context can help the LLM work through complex technical problems and foster innovation.

Moreover, with businesses increasingly relying on AI-driven solutions, early adopters of RAG will set the pace, defining industry standards and expectations.

A final, often overlooked benefit of RAG systems is risk reduction. If employees aren’t given context-specific tools, they might keep using general-purpose AI without fully understanding its risks.

Getting Grounded with AI

Your company’s future with AI doesn’t need to involve worrying about fabricated facts or misleading statements. By embracing RAG, you’re not just adopting another technology; you’re embedding truth, trust, and transparency into your AI journey.

Ready to take the next step in trustworthy AI? It’s time to ground your business’s AI in reality with RAG.